In our upcoming blog post, ‘Mastering Caching Strategies: A Comprehensive Guide’, we delve into the world of caching, a crucial technique used to store and retrieve data quickly and efficiently. We start by exploring the basics of caching, its importance in software engineering, and the various types of caching. We then compare different caching strategies, discussing their pros and cons, and providing guidance on when to use each strategy. The post also includes a detailed comparison between in-memory caching and distributed caching, two popular caching methods. Furthermore, we highlight the importance of monitoring caching performance, discussing the tools that can be used, how to measure caching performance, and how to interpret caching performance metrics. Finally, we discuss common pitfalls in caching and provide strategies to avoid them, along with best practices for caching. This blog post is a must-read for anyone looking to optimize software performance using effective caching strategies.

Introduction

Caching is a fundamental concept in software engineering that plays a vital role in enhancing the performance and scalability of applications. In computing, caching is a technique used to store and retrieve data quickly and efficiently. It involves storing a subset of data in a high-speed storage layer, such as RAM, so that future requests for that data can be served faster than accessing the data’s primary storage location.

How Caching Works

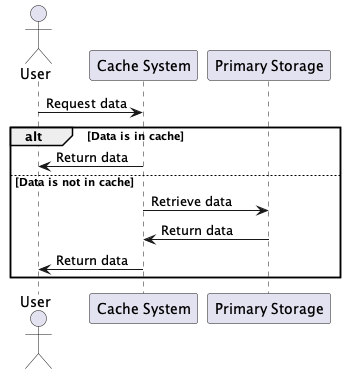

Caching works by storing frequently accessed data in a high-speed storage layer, such as RAM, so that future requests for that data can be served faster. Here is a general overview of how caching works:

-

When a request is made for data, the caching system checks if the data is already stored in the cache.

-

If the data is found in the cache, it is returned quickly without the need to access the slower primary storage.

-

If the data is not found in the cache, it is retrieved from the primary storage and stored in the cache for future requests.

-

The caching system may use various strategies to determine which data to evict from the cache when it reaches its capacity.

-

Caching systems often employ techniques such as expiration times or cache invalidation mechanisms to ensure that the data in the cache remains up to date and accurate.

By storing frequently accessed data in a cache, applications can reduce the latency and load on backend systems, resulting in improved performance and scalability.

Why We Need Caching

Caching is crucial in software engineering for several reasons:

-

Improved Performance: By serving data from a faster cache, we can significantly reduce the need to access slower data storage systems such as databases or disk-based storage. This leads to faster response times and improved overall application performance.

-

Scalability: Caching can help handle increased traffic and load on an application. By serving frequently accessed data from cache, we can minimize the load on databases and servers, allowing the application to scale more effectively.

-

Cost Efficiency: By providing faster data access, caching reduces the need for expensive hardware or infrastructure resources. This can result in cost savings by reducing the need for additional database instances or disk-based storage.

-

Reducing Latency: Caching allows for faster retrieval of data, reducing the latency associated with accessing slower storage systems. This is particularly important for applications with real-time requirements, such as gaming or financial systems.

-

Managing Spikes in Demand: Caching can help mitigate the impact of spikes in demand by serving cached data, reducing the load on backend systems and improving overall application stability.

Types of Caching

There are several types of caching used in software engineering:

-

Database Caching: This involves caching frequently accessed data from a database in a high-speed cache, reducing the need to query the database for every request.

-

Content Delivery Network (CDN) Caching: CDNs use caching to store and serve static content, such as images, videos, and webpages, from edge locations closer to the end users, reducing latency and improving performance.

-

Web Caching: Web caching involves caching web content, such as HTML, JavaScript, and image files, to reduce the load on web servers and improve response times for users.

-

Session Caching: Session caching involves caching user session data to provide a consistent user experience across multiple requests or sessions.

-

API Caching: API caching involves caching API responses to improve performance and reduce load on backend systems.

-

In-memory Caching: In-memory caching stores data in fast access hardware, such as RAM, for quick retrieval and improved performance. This type of caching is often used for high-traffic applications or computationally intensive workloads.

How Caching Works

Caching works by storing frequently accessed data in a high-speed storage layer, such as RAM, so that future requests for that data can be served faster. Here is a general overview of how caching works:

-

Data Request: When a request is made for data, the caching system checks if the data is already stored in the cache.

-

Cache Hit: If the data is found in the cache, it is returned quickly without the need to access the slower primary storage. This is known as a cache hit.

-

Cache Miss: If the data is not found in the cache, it is retrieved from the primary storage and stored in the cache for future requests. This is known as a cache miss.

-

Cache Eviction: The caching system may use various strategies to determine which data to evict from the cache when it reaches its capacity.

-

Cache Invalidation: Caching systems often employ techniques such as expiration times or cache invalidation mechanisms to ensure that the data in the cache remains up to date and accurate.

By storing frequently accessed data in a cache, applications can reduce the latency and load on backend systems, resulting in improved performance and scalability.

Understanding these basics of caching is crucial as it sets the foundation for the following sections where we will explore different caching strategies, compare in-memory caching and distributed caching, discuss how to monitor and measure caching performance, and explore some common pitfalls in caching and how to avoid them.

Comparing Caching Strategies

Caching strategies are the methods and techniques used to manage how data is stored and retrieved from a cache. Different caching strategies can be used depending on the specific requirements and characteristics of the system. In this section, we will look at several common caching strategies, their pros and cons, and when to use each strategy.

Cache-Aside

The Cache-Aside strategy, also known as Lazy Loading, involves loading data into the cache only when it’s needed. When a request for data is made, the system first checks the cache. If the data is found in the cache (a cache hit), it is returned immediately. If the data is not found in the cache (a cache miss), the data is retrieved from the primary storage, stored in the cache, and then returned.

Pros

- General Purpose: The Cache-Aside strategy can be used in a variety of scenarios, making it a versatile choice for many applications.

- Resilient to Cache Failures: Since data is loaded into the cache only when needed, this strategy is resilient to cache failures.

Cons

- Potential Data Inconsistency: If the data in the primary storage changes after it has been cached, the cache may return stale data.

Read-Through Cache

The Read-Through Cache strategy involves using a cache as the main point of data access. When a request for data is made, the cache is checked first. If the data is not found in the cache, the cache itself is responsible for retrieving the data from the primary storage and storing it in the cache before returning it.

Pros

- Good for Read-Heavy Workloads: The Read-Through Cache strategy is beneficial for read-heavy workloads, as it ensures that all data reads go through the cache.

- Supports Lazy Loading: Like the Cache-Aside strategy, the Read-Through Cache strategy supports lazy loading of data.

Cons

- Potential Data Inconsistency: Similar to the Cache-Aside strategy, the Read-Through Cache strategy can lead to data inconsistency if the data in the primary storage changes after it has been cached.

Write-Through Cache

The Write-Through Cache strategy involves writing data to the cache and the primary storage location at the same time. When a request to write data is made, the data is written to the cache and the primary storage. This ensures that the cache always contains the most up-to-date data.

Pros

- Ensures Data Consistency: The Write-Through Cache strategy ensures that the cache and the primary storage are always in sync, providing data consistency.

- Works Well with Read-Through Cache: The Write-Through Cache strategy can be used in conjunction with the Read-Through Cache strategy to ensure data consistency.

Cons

- Introduces Extra Write Latency: Since data is written to the cache and the primary storage at the same time, the Write-Through Cache strategy can introduce extra write latency.

Write-Around Cache

The Write-Around Cache strategy involves writing data directly to the primary storage, bypassing the cache. This strategy is beneficial for write-once, read-less-frequently scenarios, as it prevents the cache from being filled with write data that may not be read.

Pros

- Good for Write-Once, Read-Less-Frequently Scenarios: The Write-Around Cache strategy is beneficial for scenarios where data is written once and read infrequently.

Cons

- Cache Misses for Read Operations: Since data is written directly to the primary storage, bypassing the cache, this strategy can lead to cache misses for read operations.

Write-Back Cache

The Write-Back Cache strategy involves writing data to the cache and marking the cache entry as dirty. The data is then written to the primary storage at a later time. This strategy improves write performance by reducing the number of write operations to the primary storage.

Pros

- Improves Write Performance: The Write-Back Cache strategy improves write performance by reducing the number of write operations to the primary storage.

- Good for Write-Heavy Workloads: The Write-Back Cache strategy is beneficial for write-heavy workloads.

Cons

- Potential Data Loss: If the cache fails before the dirty entries are written to the primary storage, data loss can occur.

When to Use Each Caching Strategy

The choice of caching strategy depends on the specific requirements and access patterns of the system:

- Cache-Aside and Read-Through Cache are suitable for read-heavy workloads.

- Write-Through Cache is useful when data consistency is important.

- Write-Around Cache is appropriate for write-once, read-less-frequently scenarios.

- Write-Back Cache is beneficial for write-heavy workloads.

By understanding the different caching strategies, their pros and cons, and when to use each strategy, you can make informed decisions to optimize your caching system and improve the performance of your applications.

In-memory Caching vs Distributed Caching

In-memory caching and distributed caching are two commonly used caching strategies that can significantly improve the performance of an application. While both strategies aim to reduce the latency of data retrieval, they differ in their implementation and use cases. In this section, we will delve into a detailed comparison between in-memory caching and distributed caching, their differences, and their respective advantages and disadvantages.

In-memory Caching

In-memory caching is a technique where frequently accessed data is stored in the computer’s main memory (RAM) for faster retrieval. Since RAM is much faster than disk-based storage, in-memory caching can significantly improve the performance of an application.

Advantages of In-memory Caching

- Faster Data Retrieval: As data is stored in RAM, in-memory caching provides faster access and low latency.

- Improved Application Performance: By reducing the need to access slower data storage systems, in-memory caching can significantly improve application performance.

- Reduced Load on Backend Systems: In-memory caching can reduce the load on backend datastores, improving their performance and longevity.

Disadvantages of In-memory Caching

- Limited Storage Capacity: The amount of data that can be stored in-memory is limited by the amount of RAM available.

- Data Loss on System Failure or Restart: In-memory caches are typically not persistent. This means that if the system crashes or restarts, any data stored in the cache will be lost.

- Cost: RAM is more expensive than disk-based storage. Therefore, in-memory caching can be more costly, especially for large datasets.

Distributed Caching

Distributed caching involves storing cached data across multiple nodes or servers in a network. This strategy improves the scalability and availability of the cache, as it can handle more data and requests than a single in-memory cache.

Advantages of Distributed Caching

- Scalability: Distributed caching allows for greater storage capacity and improved scalability, as data is distributed across multiple nodes.

- High Availability and Fault Tolerance: If one node fails, the data is still available on other nodes. This makes distributed caching highly available and fault-tolerant.

- Consistency: Distributed caching solutions often provide consistency mechanisms to ensure that all nodes have the same view of the cached data.

Disadvantages of Distributed Caching

- Increased Complexity: Managing a distributed cache can be more complex than managing an in-memory cache. This includes dealing with issues like data consistency, partitioning, and replication.

- Network Overhead: In a distributed cache, data must be transmitted over the network, which can introduce latency and increase the load on the network.

- Data Consistency Challenges: Ensuring data consistency across all nodes in a distributed cache can be challenging and may require additional mechanisms or protocols.

Conclusion

In-memory caching and distributed caching each have their own advantages and disadvantages. The choice between the two will depend on the specific requirements of your application. If your application requires fast data access and you have a limited amount of data, in-memory caching may be the best choice. However, if your application needs to handle large amounts of data and requires high availability and fault tolerance, distributed caching may be a better option.

In the next section, we will discuss how to monitor and measure the performance of different caching strategies.

Monitoring and Measuring Caching Performance

Monitoring and measuring the performance of your caching system is crucial for maintaining the efficiency and effectiveness of your application. In this section, we will explore why it’s important to monitor caching performance, what tools can be used, how to measure caching performance, and how to interpret caching performance metrics.

Why It’s Important to Monitor Caching Performance

Monitoring caching performance is important for several reasons:

-

Identify Performance Bottlenecks: By monitoring caching performance, you can identify any bottlenecks that may be affecting the performance of your caching system. This can help you take proactive measures to optimize your caching performance and improve the overall user experience.

-

Ensure Optimal Cache Utilization: Monitoring cache utilization can help you ensure that your cache is being used effectively. This can help you make informed decisions about cache size, eviction policies, and other cache configurations.

-

Detect and Resolve Issues: Regular monitoring can help you detect and resolve issues before they impact your application’s performance. This includes issues like cache thrashing, high eviction rates, or cache misses.

-

Optimize Resource Usage: By monitoring cache performance, you can optimize resource usage. This can help you reduce costs and improve the efficiency of your application.

Tools for Monitoring Caching Performance

There are several tools available for monitoring caching performance:

-

Intel Performance Counter Monitor (PCM): This tool provides sample C++ routines and utilities to estimate the internal resource utilization of Intel processors, including cache performance metrics.

-

Perf: A powerful performance monitoring tool in Linux that can be used to monitor various aspects of system performance, including cache utilization.

-

Windows Performance Monitor: A built-in tool in Windows that allows you to monitor and analyze system performance, including cache performance metrics.

-

Datadog: A comprehensive monitoring solution that provides real-time monitoring, alerting, and visualization of caching metrics.

How to Measure Caching Performance

Caching performance can be measured using various metrics:

-

Cache Hit Rate: The percentage of cache accesses that result in a hit (i.e., the data is found in the cache). A high cache hit rate indicates efficient cache utilization.

-

Cache Miss Rate: The percentage of cache accesses that result in a miss (i.e., the data is not found in the cache and needs to be fetched from the primary storage). A high cache miss rate can indicate poor cache utilization.

-

Cache Latency: The time it takes to access data from the cache. Low cache latency indicates fast access to cache data.

-

Cache Coherence: The consistency of data across multiple caches in a multi-core system. High cache coherence is desirable for data consistency and synchronization.

These metrics can be measured using performance monitoring tools like Intel PCM, Perf, Windows Performance Monitor, and Datadog.

Interpreting Caching Performance Metrics

Interpreting caching performance metrics requires understanding the specific metrics being measured and their impact on system performance:

-

Cache Hit Rate: A high cache hit rate indicates that the cache is effectively serving requests from the cache without needing to fetch data from the backend. This is desirable as it leads to faster response times and lower load on the backend systems.

-

Cache Miss Rate: A high cache miss rate may indicate that the cache is not effectively storing frequently accessed data. This can lead to increased load on the backend systems and slower response times. In this case, you may need to optimize your caching strategy or increase your cache size.

-

Cache Latency: Low cache latency indicates fast access to cache data, which can improve overall system performance. High cache latency, on the other hand, can lead to slower response times and may indicate a need for optimization.

-

Cache Coherence: High cache coherence indicates that the data in the cache is consistent across all cores in a multi-core system. Low cache coherence may indicate potential data inconsistency issues.

By interpreting these metrics and comparing them to desired thresholds or benchmarks, you can identify areas for improvement and take appropriate actions to optimize caching performance.

In the next section, we will explore some common pitfalls in caching and how to avoid them.

Pitfalls of Caching and How to Avoid Them

Caching, while beneficial, is not without its challenges. It’s important to understand and be aware of these potential pitfalls so that you can take steps to avoid them. In this section, we will discuss some common pitfalls in caching and provide strategies on how to avoid them.

Common Pitfalls in Caching

Here are some common pitfalls that you might encounter when implementing caching:

-

Data Inconsistency: One of the most common pitfalls in caching is data inconsistency. This occurs when the data in the cache becomes stale or out-of-date compared to the data in the primary storage.

-

Cache Invalidation: Cache invalidation is the process of removing entries from the cache when they are no longer valid. It can be challenging to implement an effective cache invalidation strategy that ensures the cache always contains the most up-to-date data.

-

Cache Expiration: Setting an appropriate expiration time for cached data is crucial. If the expiration time is set too short, data may be evicted from the cache too quickly, leading to increased cache misses. If the expiration time is set too long, the cache may serve stale data.

-

Cache Security and Authorization: If not properly managed, caches can pose a security risk. Sensitive data stored in the cache could potentially be accessed by unauthorized users.

Strategies to Avoid Caching Pitfalls

Despite these challenges, there are strategies that you can implement to avoid these pitfalls:

-

Use Appropriate Cache Invalidation Strategies: Implementing appropriate cache invalidation strategies can help ensure that your cache always contains the most up-to-date data. This could involve invalidating cache entries when the data changes, or using a time-to-live (TTL) strategy to automatically invalidate cache entries after a certain period of time.

-

Set Appropriate Cache Expiration Times: Setting appropriate cache expiration times can help balance the trade-off between serving stale data and evicting data from the cache too quickly. The optimal cache expiration time will depend on the specific requirements of your application and how frequently the data changes.

-

Implement Cache Security Measures: Implementing cache security measures can help protect sensitive data. This could involve encrypting data before storing it in the cache, or using access control mechanisms to restrict who can access the cache.

-

Monitor Cache Performance: Regularly monitoring cache performance can help you identify and address any issues before they impact your application’s performance. This could involve tracking metrics like cache hit rate, cache miss rate, and cache latency.

Best Practices in Caching

Here are some best practices to follow when implementing caching:

-

Understand Your Application’s Data Access Patterns: Understanding how your application accesses data can help you choose the most appropriate caching strategy. For example, if your application frequently reads the same data, a read-through cache might be beneficial.

-

Use the Right Type of Cache: Different types of caches are suited to different use cases. For example, in-memory caches can provide fast access to small amounts of data, while distributed caches can provide scalable and fault-tolerant storage for larger data sets.

-

Regularly Monitor and Optimize Cache Performance: Regularly monitoring and optimizing cache performance can help ensure that your cache is effectively improving your application’s performance. This could involve tracking cache performance metrics and adjusting cache configurations as needed.

-

Test Your Cache: Testing your cache can help you identify any issues and ensure that your cache is working as expected. This could involve testing how your cache handles different workloads, or testing how your cache recovers from failures.

By understanding these common pitfalls and implementing these strategies and best practices, you can effectively use caching to improve the performance and scalability of your applications.

Conclusion

Understanding the fundamentals of caching and the various caching strategies is crucial in software engineering. Caching plays a vital role in enhancing the performance, scalability, and cost-efficiency of applications. By storing frequently accessed data in a high-speed storage layer, caching reduces the need to access slower data storage systems, leading to faster response times and improved user experience.

Different caching strategies such as Cache-Aside, Read-Through Cache, Write-Through Cache, Write-Around Cache, and Write-Back Cache have their own advantages and trade-offs. Choosing the right strategy depends on the specific requirements and access patterns of your system. Moreover, understanding the difference between in-memory caching and distributed caching can help you make an informed decision based on your application’s needs.

However, implementing caching is not without its challenges. Common pitfalls such as data inconsistency, cache invalidation issues, and security concerns need to be carefully managed. Implementing appropriate cache invalidation strategies, setting suitable cache expiration times, implementing cache security measures, and regularly monitoring cache performance are some strategies to avoid these pitfalls.

Lastly, monitoring and measuring caching performance is a key aspect of maintaining an efficient caching system. Regular monitoring can help identify performance bottlenecks, ensure optimal cache utilization, detect and resolve issues, and optimize resource usage.

In conclusion, caching is a powerful technique that can significantly improve the performance and scalability of your applications. However, it requires a deep understanding of caching strategies, continuous monitoring, and regular optimization to ensure its effectiveness. By understanding these aspects, you can effectively use caching to enhance your application’s performance, scalability, and user experience.

References

- Introduction to Caching - AWS

- Caching in Laravel - Honeybadger

- Intro to Caching with Redis - Redis

- Comparing Caching Strategies - Codeahoy

- Comparing Caching Strategies - JSTOR

- Comparing Caching Strategies - IEEE Xplore

- Caching: In-memory Grid - Vedcraft

- Distributed Caching - Microsoft

- In-memory or Distributed Output Cache - Progress

- Monitoring Cache Performance with Intel Performance Counter Monitor (PCM) - Intel

- Monitoring Cache Performance - Microsoft

- Monitoring ElastiCache Performance Metrics with Redis or Memcached - Datadog

- What are some common API caching pitfalls and how to avoid them - LinkedIn

- Micro Frontend Architecture: Pitfalls - SitePoint

- Keep Your Sites Running: 10 Common ASP.NET Pitfalls to Avoid - Microsoft

- Save Money on Serverless: Common Costly Mistakes and How to Avoid Them - Lumigo