Knative is a powerful open-source platform that simplifies the development and deployment of serverless applications on Kubernetes. This blog post provides a comprehensive overview of Knative, covering its key technical aspects and how it can be used to build and manage modern, scalable, and reliable serverless applications.

In the first section, we introduce Knative and its role in the evolution of serverless architecture. We also discuss how Knative extends Kubernetes and provides a modular and scalable platform for building and deploying serverless applications.

The second section dives into Knative Serving, focusing on its architecture and how it handles incoming requests. We discuss the role of the Activator, Autoscaler, and Controller components in managing the lifecycle of Knative services. We also explore the Queue-Proxy and its impact on request handling, as well as traffic flow management from the Ingress Gateway to Pods.

The third section explores Knative Eventing, a powerful component that enables event-driven architectures. We discuss event sources and triggers, and how they can be used to set up event-driven systems. We also cover the Broker and Channel components, which provide routing and filtering capabilities for events. Finally, we discuss Subscriptions and Delivery, ensuring reliable event processing.

The fourth section focuses on Knative Build, the component originally created to build container images from source code. Knative Build has since been deprecated in favor of Tekton Pipelines, but its ideas carry over directly. We discuss custom build templates and build pipelines, and how they can be integrated with CI/CD systems for automated workflows. We also explore the advantages of this approach for building and deploying containerized applications.

The fifth and final section discusses the various techniques and features provided by Knative to ensure the reliability and resilience of serverless applications. We cover health checks and readiness probes, which are essential for monitoring the health of Knative services. We also explore autoscaling, which allows Knative to automatically scale services based on traffic and custom metrics. Finally, we discuss resilience patterns such as retries, timeouts, and circuit breakers, which can help mitigate failures and ensure the availability of Knative services.

Introduction to Knative

In this blog, we delve into Knative, a pivotal component in the serverless computing paradigm that builds upon and extends Kubernetes. We explore its role in the evolution of serverless architectures, its foundational concepts, and its core components, such as the Activator, Autoscaler, Controller, Queue-Proxy, and Ingress Gateway.

Serverless computing has revolutionized application development by abstracting server management and allowing developers to focus solely on writing code. Knative, emerging as a cornerstone in this domain, facilitates the deployment and scaling of applications without the complexity of managing server infrastructure.

Knative enriches Kubernetes with additional abstractions that are tailored for serverless workloads. It introduces Custom Resource Definitions (CRDs) that encapsulate serverless functionalities, enabling developers to interact with serverless components using Kubernetes-native tooling.
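As a minimal sketch of what "Kubernetes-native" means here, the snippet below builds a Knative Service manifest as a plain Python dictionary. The service name and image are hypothetical placeholders; the `apiVersion` and `kind` are those of the Serving v1 API.

```python
import json


def knative_service(name: str, image: str, namespace: str = "default") -> dict:
    """Build a minimal Knative Service manifest as a plain dictionary."""
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "template": {
                "spec": {
                    # A single container; Knative creates the Route and
                    # Revision objects for this Service.
                    "containers": [{"image": image}],
                }
            }
        },
    }


# Hypothetical name and image, for illustration only.
manifest = knative_service("hello", "example.com/hello:latest")
print(json.dumps(manifest, indent=2))
```

Because JSON is valid input for `kubectl apply -f -`, the printed manifest can be applied with the same tooling used for any other Kubernetes resource.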

Through a comprehensive understanding of these components and their interplay, developers can harness Knative to create, deploy, and manage serverless applications with greater agility and efficiency.

In-Depth Knative Serving

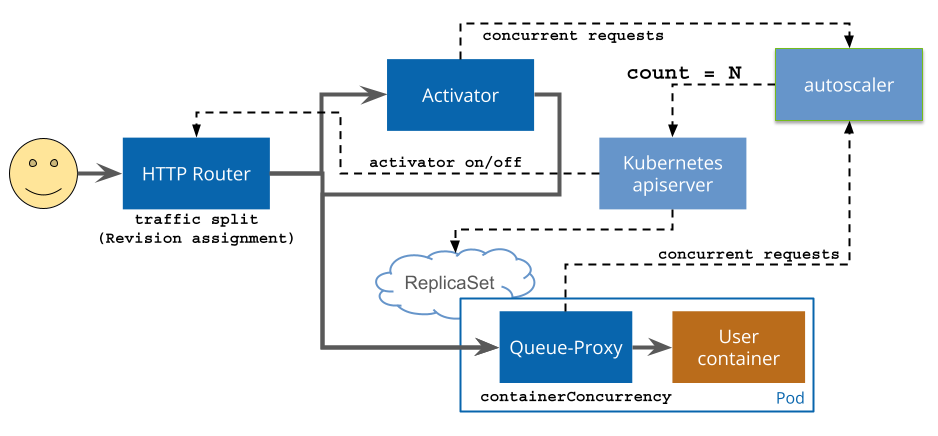

This section examines the architecture of Knative Serving and how it handles incoming requests. We look at the roles of the Activator, Autoscaler, and Controller in managing the lifecycle of Knative services, at how the Queue-Proxy affects request processing, and at how traffic flows from the Ingress Gateway to the Pods.

Activator, Autoscaler, and Controller: Orchestrating Service Management

The Activator, Autoscaler, and Controller are pivotal in managing the lifecycle of Knative services, each playing a distinct yet interconnected role.

- Activator: The Activator receives and buffers requests for a service that has been scaled to zero, or that has too little spare capacity to absorb a burst. It reports the pending load to the Autoscaler, waits for a pod to become ready, and then forwards the buffered requests to it.

- Autoscaler: The Autoscaler collects request metrics (concurrency by default, or requests per second) and adjusts the number of pods to match demand, including scaling an idle service down to zero.

- Controller: The Controller reconciles Knative resources toward their desired state. When a developer creates, updates, or deletes a Service, it creates or updates the underlying Configuration, Revision, and Route objects to match.
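The core of the Autoscaler's decision can be sketched in a few lines. This is a simplified illustration, not the real implementation: the actual autoscaler averages metrics over time windows and has a separate panic mode. The function and parameter names are assumptions made for this example.

```python
import math


def desired_pods(observed_concurrency: float, target_per_pod: float,
                 min_scale: int = 0, max_scale: int = 0) -> int:
    """Return how many pods are needed for the observed load.

    observed_concurrency: in-flight requests across the whole service.
    target_per_pod: concurrency each pod is meant to handle.
    max_scale: upper bound on pods; 0 is treated as "no upper bound".
    """
    if target_per_pod <= 0:
        raise ValueError("target_per_pod must be positive")
    wanted = math.ceil(observed_concurrency / target_per_pod)
    wanted = max(wanted, min_scale)
    if max_scale > 0:
        wanted = min(wanted, max_scale)
    return wanted


print(desired_pods(250, 100))               # 3
print(desired_pods(0, 100))                 # 0 (scale to zero)
print(desired_pods(250, 100, max_scale=2))  # 2
```

With no traffic and no minimum, the result is zero pods, which is the situation in which the Activator steps in to hold requests until a pod is back.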

Queue-Proxy: Managing Request Handling

Running as a sidecar container in each pod, the Queue-Proxy sits in front of the application container and manages the flow of incoming requests.

- Request Queuing: It buffers incoming requests when the application container is already at its concurrency limit, so that bursts are held briefly instead of overwhelming the application.

- Concurrency Control: It enforces the revision’s concurrency limit (containerConcurrency), capping how many requests the application container handles at once.

- Metrics and Probing: It reports request counts and concurrency to the Autoscaler and performs readiness checks on the application container. Distributing requests across pods is not the Queue-Proxy’s job; that happens upstream, in the ingress layer and the Activator.
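The queuing and concurrency-limiting behavior can be pictured with a small model. This is a sketch of the idea only, with a hypothetical `ConcurrencyGate` class; it does not reproduce the Queue-Proxy's actual code.

```python
from collections import deque
from typing import Optional


class ConcurrencyGate:
    """Admit at most `limit` requests at once; queue the rest in FIFO order."""

    def __init__(self, limit: int):
        if limit < 1:
            raise ValueError("limit must be at least 1")
        self.limit = limit
        self.in_flight = 0
        self.waiting = deque()

    def arrive(self, request_id: str) -> bool:
        """Return True if the request starts now, False if it was queued."""
        if self.in_flight < self.limit:
            self.in_flight += 1
            return True
        self.waiting.append(request_id)
        return False

    def finish(self) -> Optional[str]:
        """Mark one request as done and start the oldest queued one, if any."""
        if self.in_flight == 0:
            raise RuntimeError("no request is in flight")
        self.in_flight -= 1
        if self.waiting:
            self.in_flight += 1
            return self.waiting.popleft()
        return None


gate = ConcurrencyGate(limit=2)
print(gate.arrive("a"), gate.arrive("b"), gate.arrive("c"))  # True True False
print(gate.finish())  # c
```

The third request waits until a slot frees up, so the application never sees more than two requests at a time.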

Traffic Flow Management: Ingress Gateway to Pods

The Ingress Gateway is the entry point for external traffic to Knative services.

- Ingress Gateway: It receives incoming requests and applies routing rules, typically based on the request’s host name, to determine the destination service.

- Service: A Knative Service owns a Route and one or more Revisions. The Route decides which Revision, each an immutable snapshot of the container image and configuration, receives the request. If that Revision has no ready pods, the request goes to the Activator first.

- Pods: The request reaches one of the Revision’s pods, where the Queue-Proxy passes it to the application container. The response travels back along the same path to the requester.
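The path a request takes can be modeled as a lookup followed by a choice of pod. The sketch below is a deliberately simplified picture with a hypothetical `Router` class, made-up host and pod names, and plain round-robin selection; real ingress implementations are considerably more involved.

```python
from itertools import count


class Router:
    """Toy model of the hop from the ingress layer to a pod."""

    def __init__(self, routes: dict, ready_pods: dict):
        self.routes = routes          # host name -> revision name
        self.ready_pods = ready_pods  # revision name -> list of ready pod names
        self._counter = count()

    def next_hop(self, host: str) -> tuple:
        revision = self.routes.get(host)
        if revision is None:
            return ("not_found", None)
        pods = self.ready_pods.get(revision, [])
        if not pods:
            # Nothing is running: hand the request to the Activator,
            # which holds it until a pod becomes ready.
            return ("activator", revision)
        # Simple round-robin over the ready pods.
        return ("pod", pods[next(self._counter) % len(pods)])


router = Router(
    routes={"hello.default.example.com": "hello-00001"},
    ready_pods={"hello-00001": ["pod-a", "pod-b"]},
)
print(router.next_hop("hello.default.example.com"))  # ('pod', 'pod-a')
print(router.next_hop("unknown.example.com"))        # ('not_found', None)
```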

Grasping the synergy of these components empowers developers to adeptly manage Knative services, enhancing request handling for superior performance and scalability.

Advanced Knative Eventing

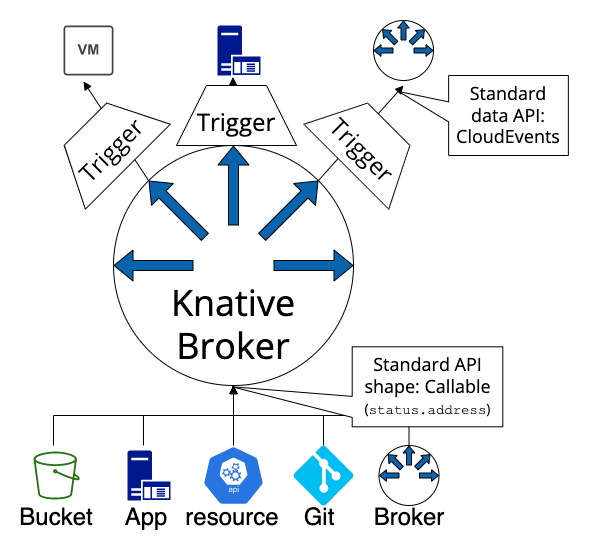

Knative Eventing is the component that enables event-driven architectures on Kubernetes. With it, developers can build systems that react to events from many sources, delivered in the CloudEvents format, which streamlines processes and improves responsiveness.

Event Sources and Triggers

Event Sources are the originators of events in an event-driven system. They range from Kubernetes resource changes to external webhook events. Commonly used event sources include:

- ApiServerSource: Watches for Kubernetes resource changes and forwards them as events to a configured sink.

- GitHubSource: Listens for GitHub webhook events (e.g., pushes and pull requests) and relays them to a sink for processing. It is installed separately from the core Eventing components.

- SinkBinding: Injects a sink address into an arbitrary Kubernetes workload, so that any container able to send HTTP requests can act as a custom event source.

Triggers route events from a Broker to Knative services or other destinations. Each Trigger can declare a filter on event attributes, such as the event type or source, so that only matching events are delivered to its subscriber.
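A Trigger's attribute filter amounts to an exact-match test on the event's attributes. The function below is a minimal sketch of that idea; the function name and the event values are hypothetical, and extra filtering features offered by newer Eventing releases are not modeled.

```python
def trigger_matches(filter_attributes: dict, event: dict) -> bool:
    """Return True if every filter attribute equals the event's attribute.

    An empty filter matches every event.
    """
    return all(event.get(key) == value
               for key, value in filter_attributes.items())


# Hypothetical event, using CloudEvents attribute names.
event = {
    "type": "com.example.order.created",
    "source": "/orders/eu",
    "id": "1234",
}

print(trigger_matches({"type": "com.example.order.created"}, event))    # True
print(trigger_matches({"type": "com.example.order.cancelled"}, event))  # False
print(trigger_matches({}, event))                                       # True
```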

Broker and Channel

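The Broker and Channel components are the backbone of event routing within Knative Eventing:

- Broker: Serves as an entry point for events into the eventing mesh and dispatches them to interested consumers. Filtering happens here, through the Triggers attached to the Broker.

- Channel: Forwards every event it receives to all of its subscribers, without filtering. How durable it is depends on the implementation: the default in-memory channel keeps nothing across restarts, while a Kafka-backed channel persists events.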

Subscriptions and Delivery

Subscriptions create a binding between a Channel and a consumer, ensuring that events are delivered to the correct destination.
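The relationship between a Channel and its Subscriptions is a fan-out: each event sent to the Channel is handed to every subscriber. The toy class below illustrates only that shape. It is an in-process stand-in with hypothetical names, not how Knative implements channels.

```python
from typing import Callable, List


class Channel:
    """Toy fan-out channel: every subscriber receives every event."""

    def __init__(self) -> None:
        self.subscribers: List[Callable[[dict], None]] = []

    def subscribe(self, handler: Callable[[dict], None]) -> None:
        self.subscribers.append(handler)

    def send(self, event: dict) -> int:
        """Deliver a copy of the event to each subscriber; return the count."""
        for handler in self.subscribers:
            handler(dict(event))
        return len(self.subscribers)


billing, audit = [], []
channel = Channel()
channel.subscribe(billing.append)
channel.subscribe(audit.append)

delivered = channel.send({"type": "com.example.order.created", "id": "1"})
print(delivered, len(billing), len(audit))  # 2 1 1
```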

Knative Eventing delivers events to their recipients as CloudEvents over HTTP, with optional retries and a dead-letter sink for events that cannot be delivered. Two aspects of delivery are worth noting:

- HTTP: Events are transmitted to services as HTTP POST requests.

- In-cluster: Destinations are usually services within the same Kubernetes cluster, addressed by their cluster-local URL, which keeps latency low.
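On the wire, an event delivered over HTTP is a POST request whose CloudEvents attributes travel as `ce-` prefixed headers (the "binary" content mode of the CloudEvents HTTP binding). The sketch below only builds such a request with the standard library; the sink URL and event values are hypothetical, and nothing is sent.

```python
import json
import urllib.request


def cloudevent_request(sink_url: str, event_type: str, source: str,
                       event_id: str, data: dict) -> urllib.request.Request:
    """Build (but do not send) a binary-mode CloudEvent HTTP request."""
    headers = {
        "ce-specversion": "1.0",
        "ce-type": event_type,
        "ce-source": source,
        "ce-id": event_id,
        "Content-Type": "application/json",
    }
    body = json.dumps(data).encode("utf-8")
    return urllib.request.Request(sink_url, data=body,
                                  headers=headers, method="POST")


# Hypothetical cluster-local sink address.
request = cloudevent_request(
    "http://orders.default.svc.cluster.local",
    event_type="com.example.order.created",
    source="/orders/eu",
    event_id="1234",
    data={"orderId": 42},
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would perform the delivery; a 2xx response is what a sender would treat as success.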

Knative Build Techniques

Knative Build is the component of the Knative ecosystem that was designed to streamline the creation of container images from source code. The project has since been deprecated in favor of Tekton Pipelines, which carries the same ideas forward. We’ll explore its core features, such as custom build templates and build pipelines, and examine how they integrate with CI/CD systems to facilitate automated workflows. Additionally, we’ll highlight the benefits of this approach for the construction and deployment of containerized applications.

Custom Build Templates and Build Pipelines

Knative Build empowers developers with the ability to define custom build templates. These templates act as blueprints that outline the necessary steps to construct a container image, including the execution of custom commands, installation of dependencies, and configuration of environment variables. By leveraging these templates, developers can create a repeatable and consistent build process tailored to their application’s requirements.

Build pipelines in Knative Build are a sequence of tasks that orchestrate the build process. They can be configured to run steps either sequentially or in parallel, thus enabling a more complex and nuanced build strategy. These pipelines are particularly useful for managing multi-stage builds where each stage might have different requirements and environments. Pipelines can be triggered by various events, such as a commit push to a version control system or the creation of a new Kubernetes resource, facilitating an automated and responsive build system.
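The sequential case can be pictured as an ordered list of named steps in which a failure stops everything after it. The runner below is a generic sketch of that control flow with hypothetical step names; it is not the Knative Build API, where each step would be a container image rather than a Python function.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_build(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first failure.

    Returns one log line per step that was actually executed.
    """
    log = []
    for name, action in steps:
        ok = action()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break
    return log


# Hypothetical steps: the second one fails, so the third never runs.
steps = [
    ("fetch-source", lambda: True),
    ("build-image", lambda: False),
    ("push-image", lambda: True),
]
print(run_build(steps))  # ['fetch-source: ok', 'build-image: failed']
```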

Integration with CI/CD Systems

Knative Build’s architecture is designed to integrate seamlessly with existing CI/CD systems like Jenkins, Travis CI, and GitLab CI/CD. This integration allows for the automatic triggering of builds upon source code changes, which are detected through webhooks. Once a build is completed, the resulting container image can be automatically tested and deployed to a Kubernetes cluster, streamlining the entire software delivery pipeline.

Advantages of Using Knative Build

Utilizing Knative Build presents several advantages:

- Simplified Build Process: It abstracts away the complexities of the build process, offering a declarative syntax to define build steps.

- Efficiency: Builds run as containers on the existing Kubernetes cluster, so they share cluster capacity instead of requiring dedicated build servers.

- Scalability: Because each build is scheduled by Kubernetes like any other workload, many builds can run concurrently as cluster capacity allows.

- Security: Builds run under a Kubernetes service account, so registry and source credentials are supplied through Secrets rather than embedded in build scripts.

Knative Build stands out as an efficient, scalable, and secure solution for building and deploying containerized applications. Its integration with CI/CD systems and the ability to define custom build processes make it an invaluable tool for developers aiming to streamline their development workflows.

Ensuring Reliability with Knative

In the evolving landscape of serverless computing, Knative stands out by offering robust mechanisms to ensure the reliability and resilience of applications. This section delves into the intricacies of these mechanisms, highlighting how they contribute to maintaining service continuity and performance.

Health Checks and Readiness Probes

Health checks and readiness probes are integral to the Knative ecosystem, serving as the first line of defense in maintaining service health.

- Health checks (liveness probes) periodically assess whether a container is still working. If a container fails its liveness probe, Kubernetes restarts it.

- Readiness probes verify that a container is prepared to handle incoming traffic. A pod that fails its readiness probe is withheld from the routing pool until it passes again.

Knative’s configuration for these probes is flexible: developers specify custom endpoints and conditions in the livenessProbe and readinessProbe fields of the container definition inside the Service object’s revision template (spec.template.spec.containers).
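To make the placement of the probe fields concrete, the sketch below builds the container entry of a Service's revision template as a Python dictionary. The image name, endpoint paths, and timing value are hypothetical; the field names (`readinessProbe`, `livenessProbe`, `httpGet`, `initialDelaySeconds`) are the standard Kubernetes ones.

```python
import json


def container_with_probes(image: str,
                          ready_path: str = "/healthz/ready",
                          live_path: str = "/healthz/live") -> dict:
    """Return a container spec with HTTP readiness and liveness probes."""
    return {
        "image": image,
        # Traffic is only routed to the pod once this endpoint succeeds.
        "readinessProbe": {"httpGet": {"path": ready_path}},
        # The container is restarted if this endpoint keeps failing.
        "livenessProbe": {
            "httpGet": {"path": live_path},
            "initialDelaySeconds": 5,
        },
    }


container = container_with_probes("example.com/hello:latest")
template = {"spec": {"containers": [container]}}
print(json.dumps(template, indent=2))
```

The resulting `template` belongs under `spec.template` of the Service object.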

Autoscaling

Knative’s autoscaling capabilities are a cornerstone feature, enabling services to dynamically adjust to varying loads.

- Horizontal autoscaling responds to traffic fluctuations by altering the count of service replicas, thereby aligning service capacity with demand. This is the mode Knative Serving provides, including scale to zero when a service is idle.

- Vertical autoscaling, which adjusts the CPU and memory allocated to each replica, is not performed by Knative itself. Per-container resources are set through the usual Kubernetes resource requests and limits.

Developers can tailor autoscaling behavior through autoscaling.knative.dev annotations on the Service’s revision template, setting parameters like the target concurrency per replica and the minimum and maximum replica counts.
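As a sketch of what those annotations look like, the helper below assembles them as a dictionary. The helper itself and the chosen values are illustrative assumptions. The annotation keys follow the `autoscaling.knative.dev/` naming used by current Knative releases; older releases spelled the bounds `minScale` and `maxScale`.

```python
def autoscaling_annotations(target: int, min_scale: int, max_scale: int,
                            metric: str = "concurrency") -> dict:
    """Return revision-template annotations for the Knative autoscaler.

    Annotation values must be strings, so numbers are converted.
    """
    if metric not in ("concurrency", "rps"):
        raise ValueError("metric must be 'concurrency' or 'rps'")
    if max_scale and min_scale > max_scale:
        raise ValueError("min_scale cannot exceed max_scale")
    return {
        "autoscaling.knative.dev/metric": metric,
        "autoscaling.knative.dev/target": str(target),
        "autoscaling.knative.dev/min-scale": str(min_scale),
        "autoscaling.knative.dev/max-scale": str(max_scale),
    }


annotations = autoscaling_annotations(target=50, min_scale=1, max_scale=10)
for key, value in annotations.items():
    print(f"{key}: {value}")
```

These entries go under `spec.template.metadata.annotations` of the Service, so that each new revision carries its own scaling settings.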

Resilience Patterns

To bolster service resilience, Knative applications rely on patterns that enhance fault tolerance and availability.

- Retries: Automatically retrying failed deliveries smooths out temporary glitches. Knative Eventing supports this through the delivery settings of a Trigger or Subscription (retry count, backoff policy, and backoff delay).

- Timeouts: Implementing timeouts prevents prolonged request processing from straining the system. In Knative Serving, the timeoutSeconds field of a revision bounds how long a request may take.

- Circuit breakers: In scenarios where services exhibit consistent failures, circuit breakers temporarily stop sending requests to the affected paths, safeguarding the system from cascading issues. Knative has no circuit breaker of its own; this is usually provided by the networking layer (for example, a service mesh such as Istio) or by application code.

These settings live in different places rather than in a single section of the Service object: timeouts in the revision spec, retries in Eventing delivery specs, and circuit breaking in the mesh or the application.
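The retry pattern with exponential backoff can be sketched as follows. This is a generic client-side illustration with hypothetical names, doubling the delay after each failed attempt; it is not Knative's own retry code. The `sleep` parameter is injectable so the behavior can be checked without actually waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(operation: Callable[[], T], retries: int = 3,
                      backoff_delay: float = 0.1,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `operation`, retrying up to `retries` times on any exception.

    The wait before retry number n (starting at 0) is backoff_delay * 2**n.
    The last exception is re-raised once the retries are used up.
    """
    attempt = 0
    while True:
        try:
            return operation()
        except Exception:
            if attempt >= retries:
                raise
            sleep(backoff_delay * (2 ** attempt))
            attempt += 1


# A hypothetical operation that fails twice, then succeeds.
calls = {"count": 0}


def flaky() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "delivered"


waits = []
result = call_with_retries(flaky, retries=3, backoff_delay=1.0,
                           sleep=waits.append)
print(result, waits)  # delivered [1.0, 2.0]
```

Capping the number of retries matters as much as the backoff: an operation that never succeeds should fail visibly (or go to a dead-letter destination) rather than retry forever.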

Conclusion

Knative’s comprehensive suite of features for ensuring service reliability is pivotal for the success of serverless applications. By leveraging health checks, autoscaling, and resilience patterns, developers can create systems that not only withstand fluctuations and failures but also thrive in the dynamic conditions of cloud-native environments.