Dive into the world of serverless architecture with our comprehensive guide for beginners. This blog post will introduce you to the concept of serverless, its benefits over traditional server-based architectures, and the evolution of serverless architecture. You will learn about the key components of a serverless stack, including serverless databases, triggers, software containers, and API gateways. We will also share best practices in serverless architecture and discuss common pitfalls and challenges. You will discover various use cases of serverless architecture, such as real-time file processing, IoT applications, and event-driven applications. Finally, we will provide a step-by-step guide on how to run serverless applications with Vercel. Whether you are a seasoned developer or a beginner, this guide will equip you with the knowledge and skills to leverage serverless architecture in your projects.

Introduction to Serverless Stack

Serverless computing, or simply serverless, is a revolutionary approach to developing and deploying applications that enable developers to focus on writing code without the need to manage the underlying infrastructure. The term “serverless” doesn’t mean servers are no longer involved, but rather, it means that developers no longer have to think about servers, even though they’re still there. It’s a concept where the cloud provider dynamically manages the allocation and provisioning of servers.

Benefits of Serverless Stack

One of the main benefits of the serverless stack is the reduced operational overhead. It eliminates the need for developers to manage servers and infrastructure, which means they can focus more on writing code and delivering business value.

Another notable advantage is the automatic scalability. In a serverless architecture, the cloud provider is responsible for scaling up or down based on the demand, ensuring that the application can handle any amount of workload without manual intervention.

The pay-per-use pricing model of serverless computing is also a significant benefit. Instead of paying for idle servers, you only pay for the actual compute time consumed. This model can lead to significant cost savings, especially for applications with variable or unpredictable workloads.

Lastly, serverless computing allows for faster time to market. Since developers don’t have to worry about server management, they can get their applications up and running more quickly.

Evolution of Serverless Architecture

Serverless computing has evolved significantly since its inception. The first generation of serverless, or Serverless 1.0, was primarily focused on executing functions in response to events, a model known as Function as a Service (FaaS). AWS Lambda is a classic example of a Serverless 1.0 platform.

However, Serverless 2.0 has expanded the scope beyond just function execution. It includes event-driven architectures, managed databases, and infrastructure-as-code tools. For example, the AWS Serverless Application Model (SAM) is a framework for building serverless applications that encompasses a broader range of services and capabilities.

In summary, serverless computing has come a long way since its early days. It has transformed the way we build and deploy applications, making it easier, faster, and more cost-effective. As serverless continues to evolve and mature, we can expect to see even more innovative use cases and applications in the future.

Basic Concepts of Serverless Architecture

In order to understand serverless architecture, it is important to familiarize ourselves with some key terms and concepts that form the foundation of this technology. These include Function-as-a-Service (FaaS), Backend-as-a-Service (BaaS), cold start, concurrency limit, and timeout.

Function-as-a-Service (FaaS)

Function-as-a-Service (FaaS) is a category of cloud computing services that provides a platform allowing developers to execute code in response to events without the complexity of building and maintaining the infrastructure typically associated with developing and launching a microservices-based application.

In FaaS, developers write their application code as a set of discrete functions, each performing a specific task. The cloud provider then executes these functions on-demand in response to events such as HTTP requests, database updates, or file uploads.

Backend-as-a-Service (BaaS)

Backend-as-a-Service (BaaS) is a model for providing web and mobile app developers with a way to link their applications to backend cloud storage and APIs exposed by back-end applications while also providing features such as user management, push notifications, and integration with social networking services.

BaaS platforms typically offer features such as user authentication, database management, file storage, and push notifications, allowing developers to focus on building the frontend of their applications.

Cold Start

A cold start in serverless computing refers to the situation where a function needs to be executed but there is no existing runtime available to run the function. In this case, the cloud provider needs to set up a new runtime, which includes loading the function code and executing the initialization code. This process takes some time and results in a delay, known as a cold start, before the function can start processing the event.

Concurrency Limit

Concurrency limit in serverless refers to the maximum number of function instances that can run simultaneously in one region. If a function exceeds this limit, it will be throttled. The concurrency limit is an important consideration for handling high traffic and ensuring optimal performance of serverless applications.

Timeout

Timeout in serverless refers to the maximum duration allowed for a function invocation before it is terminated by the serverless platform. It is a mechanism to prevent long-running or infinite loops from consuming excessive resources and affecting the performance of other functions.

To sum up, understanding these basic concepts is crucial for leveraging the full potential of serverless architecture. Serverless computing offers a new way to build and deploy applications, allowing developers to focus on writing code and delivering value without worrying about infrastructure management and scalability.

How Serverless Stack Works

Now that we’ve covered the basics of serverless architecture, let’s delve into how serverless applications actually work. A serverless application is typically composed of individual functions that respond to certain events. These functions are executed in an isolated environment, and the resources are allocated dynamically based on the workload.

Role of Triggers

In serverless architecture, functions are usually triggered by events, which can be anything from an HTTP request to a change in a database. When such an event occurs, the corresponding serverless function is invoked to perform a specific task or process the event data.

For instance, if you have a serverless function designed to process uploaded files, the function could be triggered every time a new file is uploaded. The function would then execute, process the file, and then terminate. This event-driven nature of serverless architecture allows applications to respond to real-time events and perform actions based on those events.

Asynchronous Programming in Serverless

Asynchronous programming plays a key role in serverless architecture. In a serverless environment, where functions are executed in isolated environments, asynchronous programming enables parallel execution of tasks and improves overall system performance.

Asynchronous programming can be achieved using techniques such as callbacks, promises, or async/await syntax. By leveraging asynchronous programming, serverless applications can offload time-consuming tasks to background processes or external services, allowing the main function to continue processing other tasks or respond to other events. This approach enhances the scalability and responsiveness of serverless applications.

Use of RESTful APIs in Serverless

RESTful APIs (Representational State Transfer) are commonly used in serverless applications to expose functionalities and enable communication between different components or services. In a serverless environment, RESTful APIs provide a standardized and stateless approach for clients to interact with serverless functions or services.

These APIs follow the principles of HTTP, using methods such as GET, POST, PUT, DELETE to perform operations on resources. Serverless applications can use RESTful APIs to handle incoming requests, retrieve data from databases, trigger serverless functions, or communicate with external services.

Role of DevOps (CI/CD) in Serverless

DevOps practices, including continuous integration and continuous deployment (CI/CD), play a crucial role in serverless architectures. CI/CD enables developers to automate the building, testing, and deployment of serverless applications, ensuring faster and more reliable release cycles.

In a serverless environment, where functions are deployed as independent units, CI/CD pipelines can be set up to automatically build and package serverless functions, run tests, and deploy them to the cloud provider. This streamlines the development process, reduces human errors, and ensures consistent deployment across different environments.

In conclusion, understanding how serverless applications work is crucial to leveraging the full potential of serverless architecture. By taking advantage of triggers, asynchronous programming, RESTful APIs, and DevOps practices, developers can build highly scalable, efficient, and robust serverless applications.

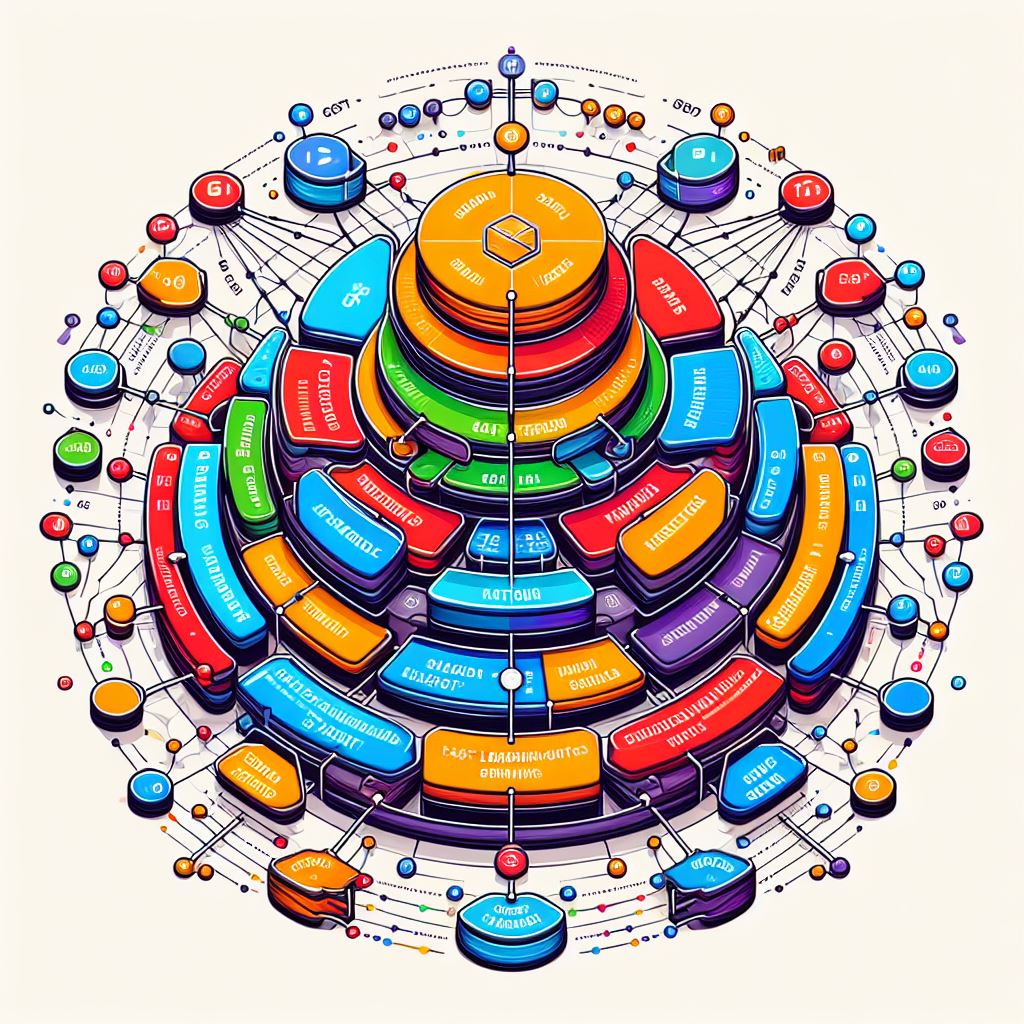

Serverless Stack Components

A serverless stack is composed of several key components that work together to provide a scalable, efficient, and robust platform for building and deploying applications. These components include serverless databases, triggers, software containers, and API gateways.

Serverless Databases

Serverless databases are a crucial component of the serverless stack. They are fully managed by the cloud provider, meaning that developers don’t need to worry about server provisioning, maintenance, or scaling.

These databases automatically scale to handle varying workloads, ensuring high availability and performance. They also provide a pay-per-use pricing model, which means that you only pay for the actual usage of the database, making it a cost-effective solution for applications with variable or unpredictable workloads.

Examples of serverless databases include AWS DynamoDB, Google Cloud Firestore, and Azure Cosmos DB.

Triggers

Triggers play a crucial role in serverless architectures as they are responsible for initiating the execution of serverless functions.

Triggers can be event sources such as HTTP requests, database updates, file uploads, message queues, or scheduled events. When a trigger event occurs, it invokes the corresponding serverless function or service to perform a specific task or process the event data.

Triggers enable the event-driven nature of serverless applications, allowing them to respond to real-time events and perform actions based on those events. They provide flexibility and scalability by decoupling the execution of functions from the underlying infrastructure, making it easy to add or remove triggers without impacting the overall application architecture.

Software Containers

Software containers in serverless refer to the packaging and deployment of serverless functions in containers.

Containers provide a lightweight and isolated runtime environment for the serverless functions, allowing them to run consistently across different platforms and environments. They encapsulate the function code along with its dependencies, ensuring that the function has everything it needs to run correctly.

Containerization in serverless helps to improve the portability of functions, simplify the deployment process, and enhance the scalability and efficiency of the serverless application.

API Gateways

API gateways in serverless architectures act as a front door for serverless functions or applications.

They handle incoming HTTP requests and route them to the appropriate serverless function. API gateways also provide features such as authentication, rate limiting, and request/response transformations.

By using an API gateway, serverless applications can expose their functionalities via a standardized and scalable interface, allowing clients to interact with the application in a secure and controlled manner.

In conclusion, understanding the key components of a serverless stack is crucial to leveraging the full potential of serverless architecture. By taking advantage of serverless databases, triggers, software containers, and API gateways, developers can build highly scalable, efficient, and robust serverless applications.

Best Practices in Serverless Architecture

As we delve deeper into serverless architecture, it’s important to understand the best practices that can help us optimize the performance and efficiency of our serverless applications. These practices include handling cold starts, managing concurrency limits, setting appropriate timeouts, and the importance of self-healing in serverless architecture.

Handling Cold Starts

A cold start in serverless computing refers to the delay that occurs when a function needs to be executed but there is no existing runtime available to run the function. This process takes some time and results in a delay, known as a cold start, before the function can start processing the event.

To mitigate the impact of cold starts, you can adopt several strategies. One approach is to keep your functions warm by scheduling regular invocations. This ensures that there is always a warm instance ready to handle incoming requests.

Another strategy is to allocate more memory to your functions. In many serverless platforms, the amount of CPU power and network bandwidth allocated to a function is proportional to the amount of memory assigned to it. Therefore, increasing the memory allocation can help reduce the cold start latency.

Managing Concurrency Limits

Concurrency in serverless refers to the number of function instances that are executing simultaneously. Managing concurrency is crucial for ensuring the performance and scalability of your serverless applications.

One way to manage concurrency is to set a concurrency limit for each function. This allows you to control the number of simultaneous executions and prevent overloading your system.

Another approach is to use queuing systems or event-driven architectures to manage the flow of requests. This can help ensure smooth execution and prevent throttling when the concurrency limit is reached.

Setting Appropriate Timeouts

Timeout in serverless refers to the maximum duration allowed for a function invocation before it is terminated by the serverless platform. Setting an appropriate timeout is crucial for ensuring efficient execution and preventing long-running functions from consuming excessive resources.

It’s important to set a timeout that allows your function to complete its execution within the allocated time. However, be mindful of setting excessively long timeouts, as this can lead to increased costs and potential resource contention.

Importance of Self-Healing in Serverless

Self-healing is an important aspect of serverless architecture. It refers to the ability of a system to automatically detect and recover from failures without human intervention.

In a serverless environment, self-healing can be achieved through automatic scaling, redundancy, and fault isolation. If a function instance fails, the serverless platform can automatically replace it with a new instance.

Moreover, serverless platforms often provide built-in health checks and monitoring tools that can help detect and address issues promptly. By implementing self-healing mechanisms, you can ensure that your serverless applications remain resilient and available, even in the face of failures.

In conclusion, adopting these best practices in serverless architecture can significantly enhance the performance, scalability, and reliability of your serverless applications. Remember, the key to effective serverless architecture is not just about eliminating servers, but also about adopting practices that optimize the use of serverless functions and services.

Pitfalls and Challenges in Serverless Architecture

While serverless architecture offers many benefits, it also comes with its own set of challenges and pitfalls. Understanding these challenges can help developers navigate the serverless landscape more effectively and build robust, scalable, and efficient applications. Let’s delve into some of the common pitfalls and challenges in serverless architecture, including cold start latency, managing state, and dealing with concurrency limits.

Cold Start Latency

One of the most commonly discussed challenges when working with serverless is known as the “cold start” problem. This refers to the latency that occurs when a serverless function is invoked for the first time or after a period of inactivity. During a cold start, the cloud provider needs to allocate resources and initialize the necessary infrastructure to run the function, which can introduce additional delay.

Cold starts can add several seconds to the code execution time, leading to elevated latency and a diminished end-user experience. This is particularly noticeable in applications that require real-time responses.

Managing State in Serverless

Another challenge in serverless architecture is managing state. Since serverless functions are stateless by design, they do not retain any information between invocations. This can pose a challenge when an application needs to maintain state or share information across multiple function invocations.

To manage state in a serverless environment, developers can design their functions to be self-contained and have all the necessary information internally. Any external state must be fetched at the beginning of invocation and exported before finishing.

Dealing with Concurrency Limits

Concurrency limits can also pose a challenge in serverless architecture. Most cloud providers impose a limit on the number of concurrent function invocations. If this limit is exceeded, additional invocations may be throttled, resulting in slower response times.

To deal with concurrency limits, developers can design their applications to handle asynchronous and event-driven workflows to reduce the need for high concurrency.

In conclusion, while serverless architecture offers many benefits, it’s important to be aware of the potential challenges and pitfalls. By understanding these challenges, developers can make informed decisions and implement strategies to overcome them, ensuring the successful implementation of serverless architecture in their applications.

Use Cases of Serverless Architecture

Serverless architecture has gained significant popularity due to its scalability, cost-effectiveness, and the ability for developers to focus on writing code rather than managing servers. It has found its place in a wide variety of applications and use cases. Let’s explore some of the key use cases where serverless architecture shines, including real-time file processing, IoT applications, and event-driven applications.

Real-Time File Processing

One of the most common use cases of serverless architecture is real-time file processing. In this scenario, a serverless function is triggered whenever a file is uploaded or modified. The function then processes the file in real-time, performing tasks such as data extraction, format conversion, or image resizing.

This approach is particularly useful for applications that need to handle large volumes of files, such as content management systems, data analysis platforms, or multimedia services. With serverless architecture, these applications can process files quickly and efficiently, without the need to manage and scale servers.

IoT Applications

Serverless architecture is also a great fit for Internet of Things (IoT) applications. IoT devices generate a large amount of data and events that need to be processed in real-time. Serverless functions can be used to handle these events, process sensor data, and trigger actions based on the data.

For instance, an IoT device could trigger a serverless function every time it detects a certain condition, such as a temperature threshold being exceeded. The function could then process the event and take appropriate action, such as sending a notification or updating a database.

Event-Driven Applications

Event-driven applications are another common use case for serverless architecture. In these applications, serverless functions are used to respond to events or changes in the system, such as user actions, system notifications, or data updates.

For example, an e-commerce application could use serverless functions to handle events like new orders, inventory updates, or customer notifications. Each event would trigger a specific function, which would then perform the necessary actions.

In conclusion, serverless architecture is a powerful tool that can be used in a wide variety of use cases. Its ability to handle real-time processing, manage IoT data, and respond to events makes it a versatile solution for many modern applications. By leveraging serverless architecture, developers can build scalable, efficient, and robust applications that can handle a wide range of workloads and use cases.

Running Serverless with Vercel

Vercel, formerly known as Zeit, provides a cloud platform for static sites and Serverless Functions that fits perfectly with modern web frameworks such as Next.js, Nuxt, Angular, Vue, Gatsby, and more.

In this section, we will provide a step-by-step guide on how to run serverless applications with Vercel. We will cover the process of setting up a Vercel account, deploying a serverless function, and testing the function.

Setting Up a Vercel Account

The first step to running serverless applications with Vercel is setting up an account. Here’s how to do it:

- Visit the Vercel website and click on the “Sign Up” button at the top right corner of the page.

- You can sign up using your GitHub, GitLab, or Bitbucket account. Click on the respective button and authorize Vercel to access your account.

- Once you’ve authorized Vercel, you’ll be redirected to the Vercel dashboard. This is where you’ll manage your serverless applications.

Deploying a Serverless Function with Vercel

After setting up your Vercel account, the next step is to deploy a serverless function. Here’s a step-by-step guide on how to do it:

-

First, install the Vercel CLI (Command Line Interface) on your local machine. You can do this by running the following command in your terminal:

npm install -g vercel -

Next, create a new directory for your project and navigate into it:

mkdir my-vercel-function && cd my-vercel-function -

In your project directory, create a new file that will contain your serverless function. You can do this by running the following command:

touch api/hello.js -

Open the

hello.jsfile in your favorite text editor and add the following code:module.exports = (req, res) => { res.status(200).send("Hello, from Vercel!"); }; -

Now, deploy your serverless function by running the following command in your terminal:

vercel

Vercel will automatically detect the serverless function and deploy it to a serverless platform. Once the deployment is complete, Vercel will provide you with a unique URL to access your function.

Testing the Function

After you’ve deployed your serverless function, you can test it by sending an HTTP request to the function’s URL. You can do this using a tool like curl or Postman. Here’s how to do it with curl:

-

Open your terminal and run the following command:

curl https://my-vercel-function.vercel.app/api/hello

Replace https://my-vercel-function.vercel.app/api/hello with the URL provided by Vercel after deployment.

- If everything is set up correctly, you should see the message “Hello, from Vercel!” in your terminal.

In conclusion, running serverless applications with Vercel is a straightforward process that involves setting up a Vercel account, deploying a serverless function, and testing the function. Vercel provides a simple and intuitive platform for deploying and managing serverless applications, making it a great choice for developers looking to leverage the benefits of serverless architecture.

Conclusion

In this blog post, we have explored the world of serverless architecture, from its definition and benefits to its evolution, fundamental concepts, and how it works. We’ve also looked at the key components of a serverless stack, best practices in serverless architecture, and some of the common pitfalls and challenges. Furthermore, we’ve discussed a variety of use cases where serverless architecture can be applied effectively, and walked through a step-by-step guide on how to run serverless applications with Vercel.

Serverless architecture offers a revolutionary approach to developing and deploying applications. It frees developers from the burden of managing servers and infrastructure, allowing them to focus on writing code and delivering business value. With its automatic scalability, pay-per-use pricing model, and faster time to market, serverless architecture provides a highly attractive option for modern application development.

Despite the challenges and pitfalls, such as cold start latency, managing state, and dealing with concurrency limits, serverless architecture continues to evolve and mature, offering solutions and best practices to mitigate these issues.

The use cases for serverless architecture are vast and varied, ranging from real-time file processing and IoT applications to event-driven applications. And with platforms like Vercel, deploying and managing serverless applications has never been easier.

In conclusion, serverless architecture offers a powerful, scalable, and cost-effective solution for modern application development. Whether you’re building a simple web app or a complex microservices architecture, serverless can provide the flexibility, efficiency, and scalability you need. So why not give it a try? Start exploring the world of serverless today and see how it can transform your application development process.